Verified datasets keyed by identifiers such as 943005621, 470588800, 8004439164, 8662930076, 1254612500, and 601601651 are only as reliable as the oversight applied to them. Verification has to contend with inconsistent sources and integration issues, so it needs a systematic approach. A governance framework can provide the foundation for that oversight, although how well such measures work in practice remains an open question.

Importance of Verified Datasets

Verified datasets are the foundation for informed decision-making across sectors.

Ensuring data accuracy and maintaining dataset integrity are crucial for fostering trust and enabling organizations to act decisively.

Without verified datasets, the risk of erroneous conclusions rises, which limits an organization's ability to innovate and adapt to changing circumstances.

Overview of Unique Identifiers

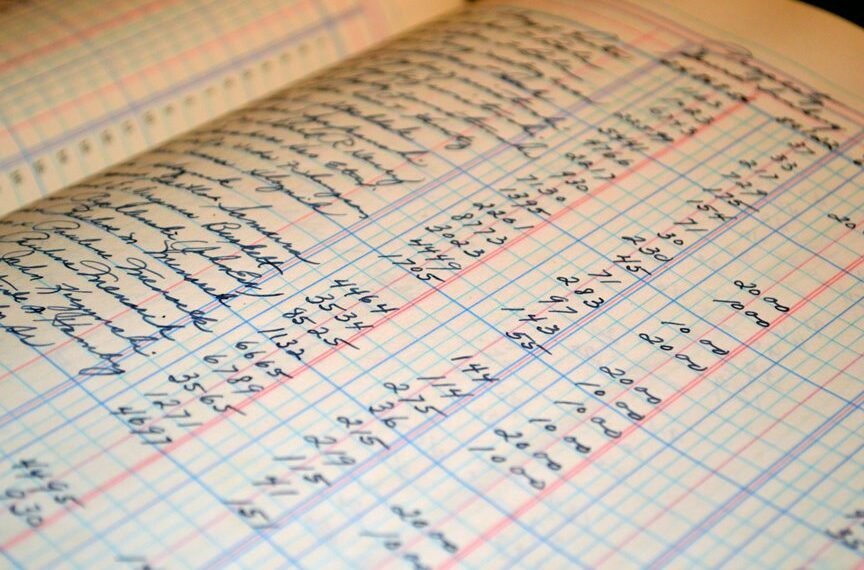

Verified datasets often rely on unique identifiers to enhance data accuracy and facilitate effective organization.

These identifiers serve as distinct markers that preserve data integrity, ensuring that each entry is traceable and unambiguous.

By employing unique identifiers, organizations can streamline data management processes, reduce errors, and improve overall data quality.

This systematic approach ultimately supports informed decision-making and promotes data-driven initiatives.
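As a minimal sketch of what "traceable and unambiguous" can mean in practice, the following Python checks a list of identifiers for duplicates and for a basic format rule. The function names and the format rule (ASCII digits only, 9 or 10 characters, chosen to match the sample identifiers in this article) are assumptions for illustration, not a standard.

```python
from collections import Counter


def find_duplicate_ids(ids):
    """Return identifiers that occur more than once, in first-seen order."""
    counts = Counter(ids)
    return [identifier for identifier, n in counts.items() if n > 1]


def is_well_formed(identifier):
    """Hypothetical format rule: ASCII digits only, 9 or 10 characters long."""
    return (
        identifier.isascii()
        and identifier.isdigit()
        and 9 <= len(identifier) <= 10
    )


ids = ["943005621", "470588800", "8004439164", "943005621", "60160165X"]

duplicates = find_duplicate_ids(ids)
malformed = [i for i in ids if not is_well_formed(i)]
```

Here `duplicates` contains `"943005621"` and `malformed` contains `"60160165X"`. Storing identifiers as strings rather than integers is a deliberate choice in this sketch, since it keeps any leading zeros intact.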

Challenges in Data Verification

How can organizations effectively navigate the myriad challenges associated with data verification? Ensuring data integrity is paramount, yet verification processes often encounter obstacles such as inconsistent data sources, varying standards, and the complexity of integrating disparate systems.

These challenges can compromise accuracy and reliability, so organizations need a deliberate verification strategy that addresses each of them rather than relying on ad hoc checks.
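One way to handle inconsistent sources is to normalize identifiers before comparing them. The sketch below assumes that two sources hold the same identifiers with different formatting (stray whitespace, separators); `normalize_id` and `reconcile` are hypothetical helper names, and the digits-only rule is an assumption that would need to match the real identifier scheme.

```python
import re


def normalize_id(raw):
    """Reduce a raw identifier to its digits (assumed canonical form)."""
    return re.sub(r"\D", "", str(raw))


def reconcile(source_a, source_b):
    """Compare two sources after normalization and report where they differ."""
    a = {normalize_id(x) for x in source_a}
    b = {normalize_id(x) for x in source_b}
    return {
        "both": sorted(a & b),
        "only_a": sorted(a - b),
        "only_b": sorted(b - a),
    }


report = reconcile(
    [" 943005621", "470-588-800"],
    ["943005621", "601601651"],
)
```

In this example `report["both"]` is `["943005621"]`, while `"470588800"` appears only in the first source and `"601601651"` only in the second. Entries in `only_a` and `only_b` are candidates for manual review, not automatic correction.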

Best Practices for Data Oversight

Navigating the challenges of data verification necessitates a robust framework for data oversight.

Effective data governance should prioritize transparency and accountability, ensuring adherence to compliance standards. Organizations benefit from regular audits, risk assessments, and clearly defined policies.
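A regular audit can start as a simple completeness check. The following is a sketch only: the record layout and the field names `id`, `source`, and `verified_on` are assumptions made for illustration, and a real audit would follow the organization's own policies.

```python
def audit_records(records, required_fields=("id", "source", "verified_on")):
    """Return (record index, issue) pairs for missing or empty required fields."""
    findings = []
    for index, record in enumerate(records):
        for field in required_fields:
            # Treat both absent keys and empty values as missing.
            if record.get(field) in (None, ""):
                findings.append((index, f"missing {field}"))
    return findings


records = [
    {"id": "943005621", "source": "crm", "verified_on": "2024-01-15"},
    {"id": "470588800", "source": ""},
]

findings = audit_records(records)
```

For the sample data, `findings` flags the second record twice: once for an empty `source` and once for an absent `verified_on`. Logging these findings on a schedule gives the transparency and accountability described above a concrete, reviewable form.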

Establishing a culture of continuous improvement fosters resilience: it helps teams adapt to evolving regulatory requirements and strengthens data integrity over time, without sacrificing individual autonomy.

Conclusion

In conclusion, the integrity of verified datasets tied to identifiers such as 943005621 and 470588800 depends on how well verification challenges are handled. Given inconsistent sources and complex integrations, a robust governance framework is a necessity rather than an optional benefit. The future of data-driven initiatives may depend on whether organizations foster transparency and accountability, since these ultimately determine the quality and trustworthiness of the datasets.