Validating cross-system identifiers such as 18009432189 and 961122901 requires care to preserve data integrity. Each identifier's length, character set, and formatting determine which checks apply to it, so validation has to be targeted rather than generic. Discrepancies in format and structure can cause records to be rejected or matched incorrectly. Understanding these issues is a prerequisite for interoperability across platforms.

Overview of Identifier Validation Techniques

Identifier validation techniques serve as critical mechanisms in ensuring data integrity across systems.

They employ various identifier formats and validation algorithms to verify the authenticity and consistency of data inputs. By systematically checking these identifiers against predefined criteria, organizations can minimize errors and enhance interoperability.
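As an illustration of checking identifiers against predefined criteria, the sketch below matches an input against a small table of patterns. The rule names and the patterns themselves (`numeric_9`, `numeric_11`) are assumptions chosen to fit the two sample values in this article, not rules taken from any particular system.

```python
import re
from typing import List

# Hypothetical rule table: rule name -> pattern the whole identifier must match.
RULES = {
    "numeric_9": re.compile(r"[0-9]{9}"),
    "numeric_11": re.compile(r"[0-9]{11}"),
}

def matching_rules(identifier: str) -> List[str]:
    """Return the names of every rule that the identifier fully matches."""
    return [name for name, pattern in RULES.items() if pattern.fullmatch(identifier)]

if __name__ == "__main__":
    for sample in ("18009432189", "961122901", "96112290A"):
        print(sample, matching_rules(sample))
```

An empty result means the input satisfied none of the configured criteria and should be rejected or routed for review.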

Rigorous validation of this kind helps keep data management reliable across diverse platforms.

Detailed Analysis of Each Identifier

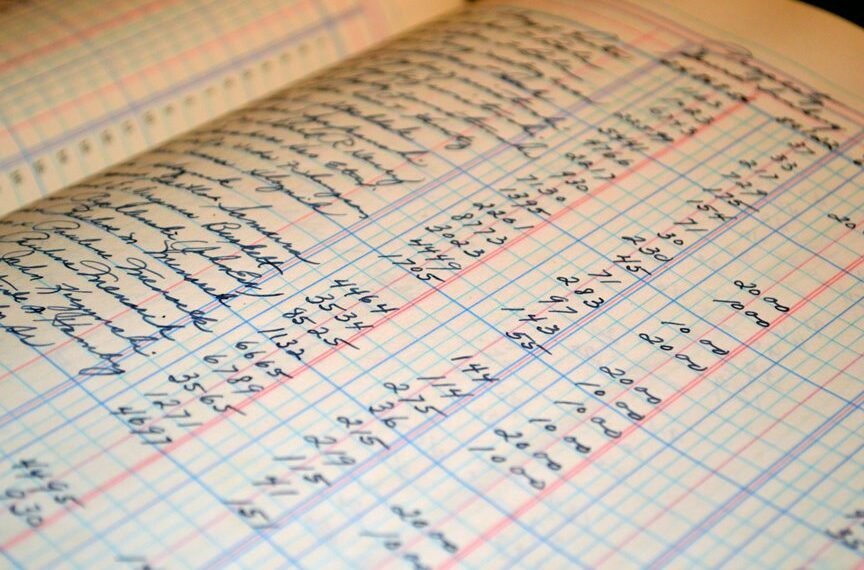

A comprehensive examination of various identifiers reveals the distinct characteristics and validation requirements essential for effective data management.

Different identifier formats, such as numeric and alphanumeric, necessitate specific validation methods to ensure integrity and accuracy.

Each identifier’s structure influences its usability across systems, highlighting the importance of stringent validation protocols to maintain data consistency and facilitate seamless integration among diverse platforms.
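One way to route an identifier to the right validation method is to classify its format first. The following is a minimal sketch that assumes only two formats matter (ASCII digits only, or ASCII letters and digits); the function name `classify_identifier` and the category labels are hypothetical.

```python
def classify_identifier(value: str) -> str:
    """Classify a raw identifier as 'numeric', 'alphanumeric', or 'invalid'."""
    # Reject empty input and non-ASCII characters up front, because
    # str.isdigit() and str.isalnum() also accept non-ASCII digits and letters.
    if not value or not value.isascii():
        return "invalid"
    if value.isdigit():
        return "numeric"
    if value.isalnum():
        return "alphanumeric"
    return "invalid"

if __name__ == "__main__":
    for sample in ("961122901", "AB12CD", "12-34"):
        print(sample, classify_identifier(sample))
```

A numeric result could then be passed to length or range checks, while an alphanumeric one might need case normalization before comparison.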

Identifying Common Discrepancies

Discrepancies often arise from variations in identifier formats and validation methods across different systems.

Common discrepancy types include formatting inconsistencies, leading zeros, and differences in data entry standards. These issues frequently lead to validation errors, complicating data integration.

Addressing these discrepancies is crucial for maintaining accurate records and ensuring seamless interoperability among systems, thus enhancing the overall reliability of identifier validation processes.
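The discrepancy types named above can be handled with a normalization step before comparison. The sketch below assumes that spaces, hyphens, dots, and parentheses are the only separators in use, and that the target system defines a fixed width for zero padding; both are assumptions, and the helper names are hypothetical.

```python
import re
from typing import Optional

# Assumed separator set: whitespace, hyphens, dots, and parentheses.
_SEPARATORS = re.compile(r"[\s\-.()]+")

def normalize(raw: str) -> str:
    """Remove separators while keeping the value a string, so leading zeros survive."""
    return _SEPARATORS.sub("", raw)

def same_identifier(a: str, b: str, width: Optional[int] = None) -> bool:
    """Compare two identifiers after normalization.

    If width is given, both values are left-padded with zeros to that width,
    which reconciles systems that store or drop leading zeros.
    """
    a_norm, b_norm = normalize(a), normalize(b)
    if width is not None:
        a_norm, b_norm = a_norm.zfill(width), b_norm.zfill(width)
    return a_norm == b_norm

if __name__ == "__main__":
    print(normalize("1-800-943-2189"))
    print(same_identifier("0961122901", "961122901"))
    print(same_identifier("0961122901", "961122901", width=10))
```

Keeping identifiers as strings is the key design choice here: converting them to integers silently discards leading zeros, which is one of the discrepancies described above.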

Best Practices for Ensuring Data Integrity

Ensuring data integrity requires the implementation of systematic practices that promote consistency and accuracy across all systems involved.

Effective data cleansing processes are essential, as they eliminate inaccuracies and redundancies. Additionally, establishing robust validation rules ensures that data meets predefined standards before entry.

These practices not only enhance reliability but also empower organizations to maintain high-quality data for informed decision-making and operational efficiency.
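The cleansing and validation steps described above can be sketched as a single pass over incoming records. This is an illustrative outline only: the function name `cleanse` and the choice to treat surrounding whitespace as noise are assumptions, and the validation rule is supplied by the caller.

```python
from typing import Callable, Iterable, List, Tuple

def cleanse(
    records: Iterable[str],
    is_valid: Callable[[str], bool],
) -> Tuple[List[str], List[str]]:
    """Split records into accepted values and rejected raw inputs.

    Accepted values are whitespace-trimmed, validated, and de-duplicated
    in first-seen order. Rejected inputs are returned unchanged for review.
    """
    accepted: List[str] = []
    rejected: List[str] = []
    seen = set()
    for raw in records:
        value = raw.strip()
        if not is_valid(value):
            rejected.append(raw)      # fails the rule: keep the raw input for auditing
        elif value not in seen:
            seen.add(value)           # first occurrence of a valid value
            accepted.append(value)
        # valid duplicates are dropped
    return accepted, rejected

if __name__ == "__main__":
    incoming = [" 961122901", "961122901", "96112290X", "18009432189"]
    print(cleanse(incoming, str.isdigit))
```

Returning the rejected inputs separately, rather than discarding them, leaves room for manual correction before the data is resubmitted.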

Conclusion

Thorough validation of cross-system identifiers such as 18009432189 and 961122901 is essential for maintaining data integrity. By applying systematic validation techniques, organizations can address discrepancies and improve interoperability. Adopting the best practices outlined here supports more reliable and efficient data exchange, and helps keep the decisions that depend on that data well informed.